Machine Learning Question Bank

Machine Learning Module-1 Questions

- Define the following terms:

- Learning

- LMS weight update rule

- Version Space

- Consistent Hypothesis

- General Boundary

- Specific Boundary

- Concept

- What are the important objectives of machine learning?

- Explain the Find-S algorithm with the given example. Give its applications.

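| Example | Sky | AirTemp | Humidity | Wind | Water | Forecast | EnjoySport |
|---|---|---|---|---|---|---|---|
| 1 | Sunny | Warm | Normal | Strong | Warm | Same | Yes |
| 2 | Sunny | Warm | High | Strong | Warm | Same | Yes |
| 3 | Rainy | Cold | High | Strong | Warm | Change | Yes |
| 4 | Sunny | Warm | High | Strong | Cool | Change | Yes |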

- What do you mean by a well-posed learning problem? Explain the important features that are required to define a learning problem well.

- Explain inductive bias with reference to a biased hypothesis space and an unbiased learner.

- What are the basic design issues and approaches to machine learning?

- How is the Candidate Elimination algorithm different from the Find-S algorithm?

- How do you design a checkers learning problem?

- Explain the various stages involved in designing a learning system

- Trace the Candidate Elimination Algorithm for the hypothesis space H’ given the sequence of training examples from Table 1.

H’ = <?, Cold, High, ?, ?, ?> v

- Differentiate between Training data and Testing data.

- Differentiate between Supervised, Unsupervised and Reinforcement Learning

- What are the issues in Machine Learning?

- Explain the List-Then-Eliminate algorithm with an example.

- What is the difference between the Find-S and Candidate Elimination algorithms?

- Explain the concept of Inductive Bias

- With a neat diagram, explain how you can model inductive systems by equivalent deductive systems.

- What do you mean by Concept Learning?
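As a study aid for the Find-S question above, the following is a minimal sketch in Python, not a reference solution. It assumes the four EnjoySport rows exactly as printed in this question bank (all labelled Yes) and uses `None` to stand for the most specific hypothesis; the function name `find_s` is illustrative.

```python
def find_s(examples):
    """Return the most specific hypothesis consistent with the positive examples."""
    hypothesis = None  # None stands for the most specific hypothesis (matches nothing)
    for attributes, label in examples:
        if label != "Yes":
            continue  # Find-S ignores negative examples
        if hypothesis is None:
            # The first positive example becomes the initial hypothesis
            hypothesis = list(attributes)
        else:
            # Generalise every attribute that disagrees with the new example
            hypothesis = [h if h == a else "?" for h, a in zip(hypothesis, attributes)]
    return hypothesis


# Rows copied from the table in this question bank (labels as printed there)
training_examples = [
    (("Sunny", "Warm", "Normal", "Strong", "Warm", "Same"), "Yes"),
    (("Sunny", "Warm", "High", "Strong", "Warm", "Same"), "Yes"),
    (("Rainy", "Cold", "High", "Strong", "Warm", "Change"), "Yes"),
    (("Sunny", "Warm", "High", "Strong", "Cool", "Change"), "Yes"),
]

result = find_s(training_examples)
print(result)
```

Because Find-S only ever generalises, the order of the positive examples does not change the final hypothesis.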

Machine Learning Module-2 Questions

- Give decision trees to represent the following Boolean functions:

- A ∧ ¬B

- A ∨ [B ∧ C]

- A XOR B

- [A ∧ B] ∨ [C ∧ D]

- Consider the following set of training examples:

| Instance | Classification | a1 | a2 |
|---|---|---|---|
| 1 | + | T | T |
| 2 | + | T | T |
| 3 | - | T | F |
| 4 | + | F | F |
| 5 | - | F | T |
| 6 | - | F | T |

(a) What is the entropy of this collection of training examples with respect to the target function classification?

(b) What is the information gain of a2 relative to these training examples?

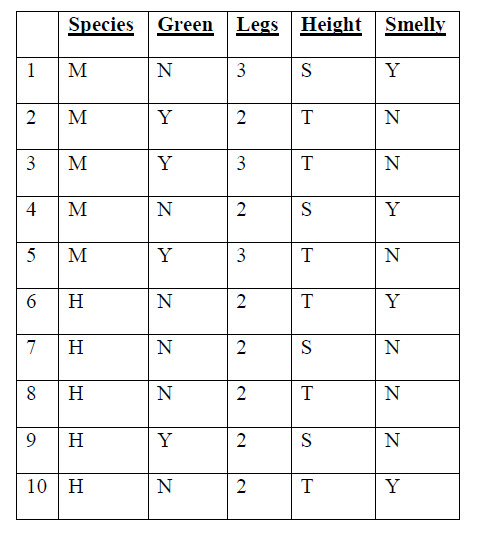

- NASA wants to be able to discriminate between Martians (M) and Humans (H) based on the following characteristics: Green ∈ {N, Y}, Legs ∈ {2, 3}, Height ∈ {S, T}, Smelly ∈ {N, Y}.

- Our available training data is as follows:


a) Greedily learn a decision tree using the ID3 algorithm and draw the tree.

b)

(i) Write the learned concept for Martian as a set of conjunctive rules (e.g., if (green=Y and legs=2 and height=T and smelly=N), then Martian; else if ... then Martian;...; else Human).

(ii) The solution of part b)i) above uses up to 4 attributes in each conjunction. Find a set of conjunctive rules using only 2 attributes per conjunction that still results in zero error in the training set. Can this simpler hypothesis be represented by a decision tree of depth 2? Justify.

4. Discuss Entropy in the ID3 algorithm with an example.

5. Compare Entropy and Information Gain in ID3 with an example.

6. Describe the hypothesis space search in ID3 and contrast it with the Candidate-Elimination algorithm.

7. Relate Inductive bias to Decision tree learning.

8. Illustrate Occam’s razor and explain its importance with respect to the ID3 algorithm.

9. List the issues in Decision Tree Learning. Interpret the algorithm with respect to overfitting the data.

10. Discuss the effect of Reduced Error Pruning in the decision tree algorithm.

11. What types of problems are best suited for decision tree learning?

12. Write the steps of the ID3 algorithm.

13. What are the capabilities and limitations of ID3?

14. Define (a) Preference Bias (b) Restriction Bias.

15. Explain the various issues in Decision tree Learning.

16. Describe Reduced Error Pruning.

17. What are the alternative measures for selecting attributes?

18. What is Rule Post Pruning?
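For the entropy and information gain questions in this module, a short Python sketch may help with checking hand calculations. It assumes the six-instance a1/a2 collection given earlier in this module; the helper names `entropy` and `information_gain` are illustrative and do not come from any library.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * log2(n / total) for n in Counter(labels).values())


def information_gain(rows, attribute):
    """Entropy of the collection minus the weighted entropy after splitting on `attribute`."""
    labels = [row["label"] for row in rows]
    remainder = 0.0
    for value in {row[attribute] for row in rows}:
        subset = [row["label"] for row in rows if row[attribute] == value]
        remainder += len(subset) / len(rows) * entropy(subset)
    return entropy(labels) - remainder


# The six training instances from the a1/a2 question in this module
rows = [
    {"label": "+", "a1": "T", "a2": "T"},
    {"label": "+", "a1": "T", "a2": "T"},
    {"label": "-", "a1": "T", "a2": "F"},
    {"label": "+", "a1": "F", "a2": "F"},
    {"label": "-", "a1": "F", "a2": "T"},
    {"label": "-", "a1": "F", "a2": "T"},
]

collection_entropy = entropy([row["label"] for row in rows])
gain_a1 = information_gain(rows, "a1")
gain_a2 = information_gain(rows, "a2")
print(collection_entropy, gain_a1, gain_a2)
```

ID3 would apply `information_gain` to every candidate attribute at a node and split on the one with the largest value.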

Machine Learning Module-3 Questions

1) What is an Artificial Neural Network?

2) What types of problems can Artificial Neural Networks be applied to?

3) Explain the concept of a Perceptron with a neat diagram.

4) Discuss the Perceptron training rule.

5) Under what conditions does the perceptron rule fail, making it necessary to apply the delta rule?

6) What do you mean by Gradient Descent?

7) Derive the Gradient Descent Rule.

8) Under what conditions is Gradient Descent applied?

9) What are the difficulties in applying Gradient Descent?

10) Differentiate between Gradient Descent and Stochastic Gradient Descent.

11) Define the Delta Rule.

12) Derive the Backpropagation rule, considering the training rule for output unit weights and the training rule for hidden unit weights.

13) Write the Backpropagation algorithm.

14) Explain how to learn Multilayer Networks using the Gradient Descent algorithm.

15) What is a Squashing Function?
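To accompany the question on the Perceptron training rule, here is a hedged Python sketch of the update w_i ← w_i + η(t − o)x_i. The choice of the Boolean AND function as training data, the learning rate `eta=0.5`, the ±1 output encoding, and the function names are all assumptions made for illustration.

```python
def predict(weights, inputs):
    """Thresholded perceptron output: +1 if w . x > 0, otherwise -1."""
    activation = sum(w * x for w, x in zip(weights, inputs))
    return 1 if activation > 0 else -1


def train_perceptron(examples, eta=0.5, max_epochs=100):
    """Apply the perceptron training rule until an epoch produces no mistakes."""
    weights = [0.0] * len(examples[0][0])
    for _ in range(max_epochs):
        mistakes = 0
        for inputs, target in examples:
            output = predict(weights, inputs)
            if output != target:
                mistakes += 1
                # w_i <- w_i + eta * (t - o) * x_i
                weights = [w + eta * (target - output) * x
                           for w, x in zip(weights, inputs)]
        if mistakes == 0:
            break
    return weights


# Boolean AND with a constant bias input x0 = 1 (assumed toy data, linearly separable)
and_examples = [
    ((1, 0, 0), -1),
    ((1, 0, 1), -1),
    ((1, 1, 0), -1),
    ((1, 1, 1), 1),
]

weights = train_perceptron(and_examples)
predictions = [predict(weights, inputs) for inputs, _ in and_examples]
print(weights, predictions)
```

The loop stops here only because AND is linearly separable; on data such as XOR it would run until `max_epochs`, which is the situation in which the delta rule is used instead.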

Machine Learning Module-4 Questions

1) Explain the concept of Bayes theorem with an example.

2) Explain Bayesian belief network and conditional independence with example.

3) What are Bayesian Belief nets? Where are they used?

4) Explain the Brute force MAP hypothesis learner. What is the minimum description length principle?

5) Explain the k-Means Algorithm with an example.

6) How do you classify text using Bayes Theorem?

7) Define (i) Prior Probability (ii) Conditional Probability (iii) Posterior Probability

8) Explain Brute force Bayes Concept Learning

9) Explain the concept of EM Algorithm.

10) What is conditional Independence?

11) Explain Naïve Bayes Classifier with an Example.

12) Describe the concept of MDL.

13) What are Consistent Learners?

14) Discuss Maximum Likelihood and Least Square Error Hypothesis.

15) Describe Maximum Likelihood Hypothesis for predicting probabilities.

16) Explain the Gradient Search to Maximize Likelihood in a neural net.
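For the questions on Bayes theorem and on prior and posterior probability, a small numerical sketch in Python can make the terms concrete. The diagnostic-test setting and all three probabilities below are assumed values chosen only for illustration, and `posterior` is a hypothetical helper name.

```python
def posterior(prior, likelihood, false_positive_rate):
    """P(h | D) by Bayes theorem for a binary hypothesis h and a positive observation D.

    prior               = P(h)
    likelihood          = P(D | h)
    false_positive_rate = P(D | not h)
    """
    # Total probability of the observation: P(D) = P(D|h)P(h) + P(D|not h)P(not h)
    evidence = likelihood * prior + false_positive_rate * (1 - prior)
    return likelihood * prior / evidence


# Assumed illustrative numbers: a rare condition and an imperfect test
p_condition_given_positive = posterior(
    prior=0.008,
    likelihood=0.98,
    false_positive_rate=0.03,
)
print(p_condition_given_positive)
```

With these assumed numbers the posterior remains well below 0.5 even after a positive result, because the small prior outweighs the high likelihood.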

Machine Learning Module-5 Questions

1. What is Reinforcement Learning?

2. Explain the Q function and Q Learning Algorithm.

3. Describe the K-nearest Neighbour learning algorithm for a continuous-valued target function.

4. Discuss the major drawbacks of the K-nearest Neighbour learning algorithm and how they can be corrected.

5. Define the following terms with respect to K-Nearest Neighbour Learning:

i) Regression ii) Residual iii) Kernel Function.

6. Explain the Q learning algorithm assuming deterministic rewards and actions.

7. Explain the K-nearest neighbour algorithm for approximating a discrete-valued function f : ℝ^n → V with pseudo code.

8. Explain Locally Weighted Linear Regression.

9. Explain the CADET System using Case based reasoning.

10. Explain the two key difficulties that arise while estimating the Accuracy of Hypothesis.

11. Define the following terms:

a. Sample error b. True error c. Random Variable

d. Expected value e. Variance f. Standard Deviation

12. Explain Binomial Distribution with an example.

13. Explain Normal or Gaussian distribution with an example.
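For the K-nearest neighbour question on discrete-valued target functions, the following is a minimal Python sketch using a majority vote over the k closest training points. The toy points, the labels "A" and "B", the default `k=3`, and the name `knn_classify` are assumptions for illustration; Euclidean distance is one common choice, not the only one.

```python
from collections import Counter
from math import dist  # Euclidean distance, available from Python 3.8


def knn_classify(training, query, k=3):
    """Return the most common label among the k training points nearest to `query`."""
    neighbours = sorted(training, key=lambda example: dist(example[0], query))[:k]
    votes = Counter(label for _, label in neighbours)
    return votes.most_common(1)[0][0]


# Assumed toy data: two well-separated clusters in the plane
training = [
    ((1.0, 1.0), "A"),
    ((1.0, 2.0), "A"),
    ((2.0, 1.0), "A"),
    ((6.0, 6.0), "B"),
    ((7.0, 6.0), "B"),
    ((6.0, 7.0), "B"),
]

label_near_a = knn_classify(training, (2.0, 2.0))
label_near_b = knn_classify(training, (6.0, 5.0))
print(label_near_a, label_near_b)
```

For a continuous-valued target, the majority vote would be replaced by the mean of the k neighbours' target values.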
